The usage instructions and model are currently being organized and will be available soon.

Abstract

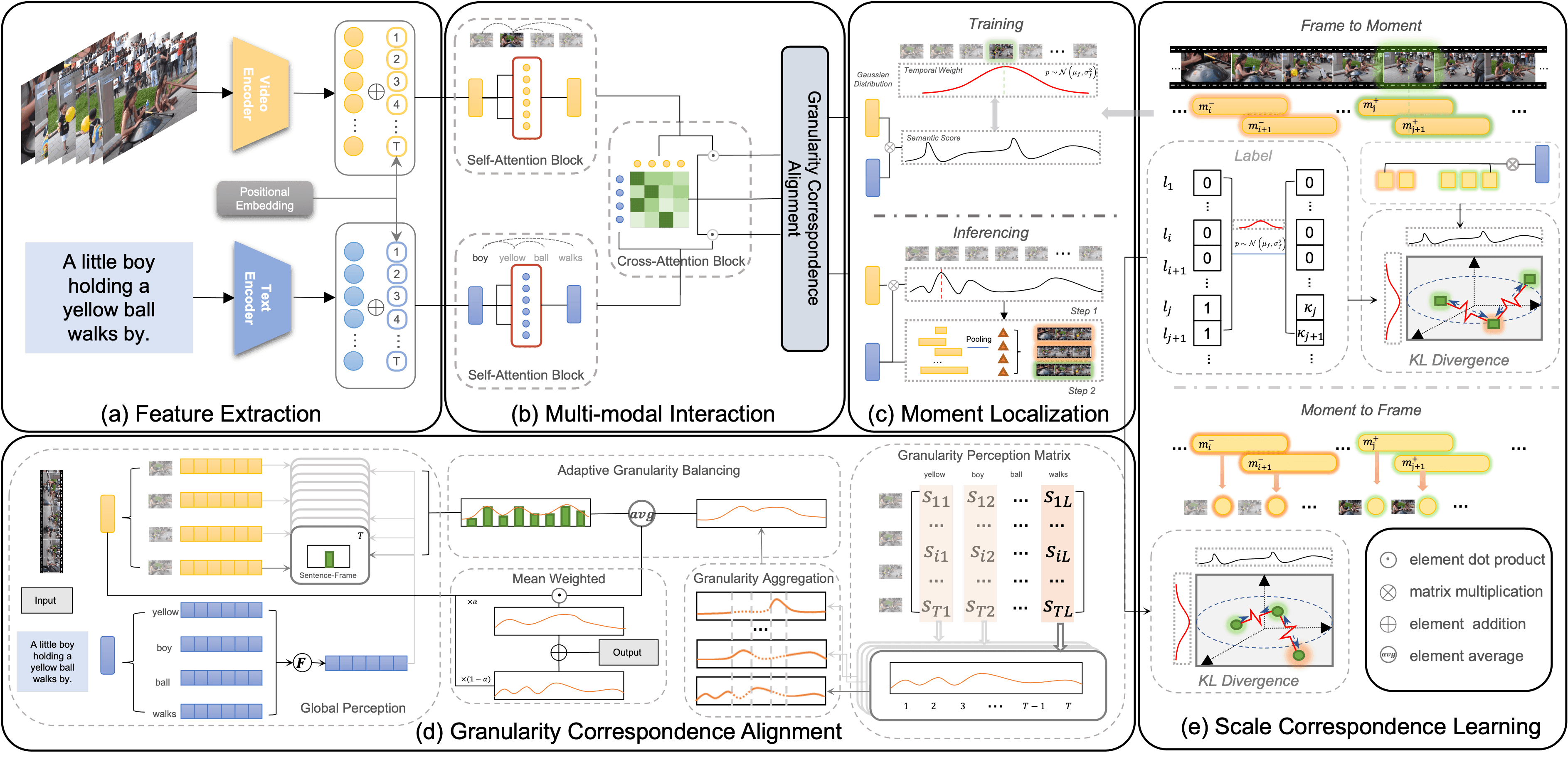

Video moment localization (VML) aims to identify the temporal boundaries of the moment that semantically matches a given query. Compared with other supervision paradigms, point-supervised VML strikes a balance between localization accuracy and annotation cost. However, it is still in its infancy because of two challenges: explicit granularity alignment and implicit scale perception. To this end, we propose a Semantic Granularity and Scale Correspondence Integration (SG-SCI) framework that leverages limited single-frame annotations for correspondence learning. It explicitly models the semantic relations among features of different granularities and adaptively mines implicit semantic scales, thereby enhancing feature representations across granularities and scales. Specifically, SG-SCI employs a granularity correspondence alignment module that aligns semantic information by exploiting latent prior knowledge. We then develop a scale correspondence learning strategy that identifies and addresses semantic scale differences. Extensive experiments on benchmark datasets demonstrate the promising performance of our model against several state-of-the-art competitors.
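To make the supervision setting concrete, the following is a minimal Python sketch of the data involved. It is not taken from the SG-SCI code, which has not been released yet. Under point supervision, each query is annotated with a single timestamp inside the target moment instead of a full start/end boundary, while the model must still predict a full boundary. The names `Moment`, `PointLabel`, `temporal_iou`, and `point_is_consistent` are hypothetical. Temporal IoU is shown only as a common way to score localization accuracy in VML. It is an assumption here, not a description of this paper's evaluation protocol.

```python
from dataclasses import dataclass


@dataclass
class Moment:
    """A temporal boundary (in seconds) within an untrimmed video."""
    start: float
    end: float


@dataclass
class PointLabel:
    """Hypothetical single-frame (point) annotation: one timestamp inside the target moment."""
    timestamp: float


def temporal_iou(pred: Moment, gt: Moment) -> float:
    """Intersection-over-union of two 1-D time intervals (0.0 if they do not overlap)."""
    inter = max(0.0, min(pred.end, gt.end) - max(pred.start, gt.start))
    union = (pred.end - pred.start) + (gt.end - gt.start) - inter
    return inter / union if union > 0 else 0.0


def point_is_consistent(point: PointLabel, moment: Moment) -> bool:
    """A valid point label must fall inside the moment it annotates."""
    return moment.start <= point.timestamp <= moment.end


if __name__ == "__main__":
    gt = Moment(10.0, 20.0)      # full boundary (costly to annotate)
    point = PointLabel(15.0)     # point label (cheap to annotate)
    pred = Moment(12.0, 22.0)    # a model's predicted boundary
    print(point_is_consistent(point, gt))  # True
    print(round(temporal_iou(pred, gt), 3))  # 0.667
```

The point label carries far less information than the full boundary, which is why point-supervised methods need additional learning signals to recover complete moment boundaries.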

BibTeX

@inproceedings{wang2024explicit,
  title={Explicit Granularity and Implicit Scale Correspondence Learning for Point-Supervised Video Moment Localization},
  author={Wang, Kun and Liu, Hao and Jie, Lirong and Li, Zixu and Hu, Yupeng and Nie, Liqiang},
  booktitle={Proceedings of the 32nd ACM International Conference on Multimedia},
  pages={9214--9223},
  year={2024}
}