Semantic Collaborative Learning for Cross-Modal Moment Localization

Abstract

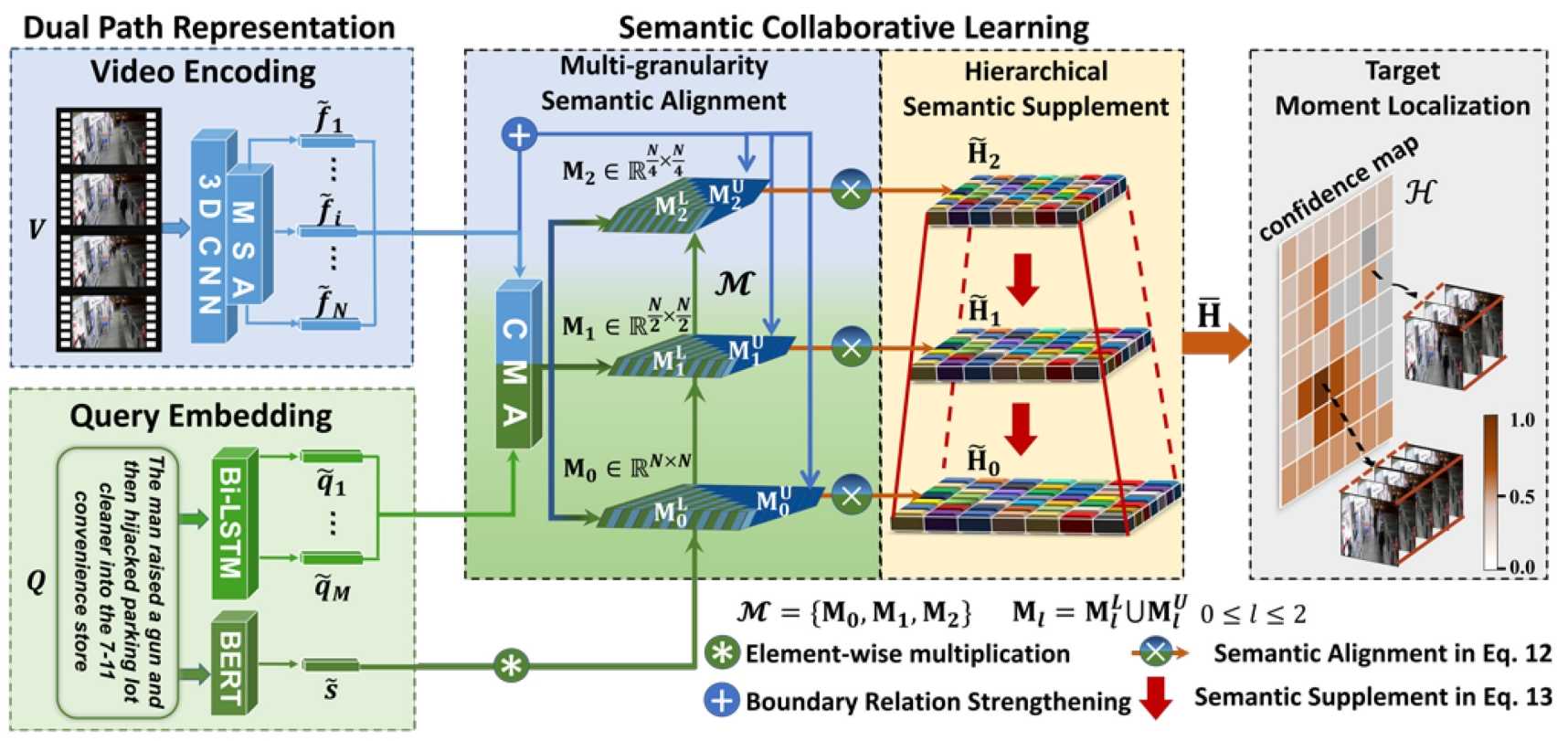

Localizing a desired moment within an untrimmed video given a natural language query, i.e., cross-modal moment localization, has recently attracted widespread research attention. The task is challenging because it requires not only an accurate understanding of intra-modal semantic information but also explicit modeling of inter-modal semantic correlations, namely consistency and complementarity. Existing efforts mainly focus on intra-modal semantic understanding and inter-modal semantic alignment, while ignoring the necessary semantic supplement between modalities. To this end, we present a cross-modal semantic perception network for more effective intra-modal semantic understanding and inter-modal semantic collaboration. Concretely, we design a dual-path representation network for intra-modal semantic modeling, and we develop a semantic collaborative network that achieves multi-granularity semantic alignment and hierarchical semantic supplement. Through this semantic collaborative learning, the model achieves effective moment localization. Extensive comparison experiments demonstrate that our model achieves promising performance compared with existing state-of-the-art methods.
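The abstract does not describe the network internals, so the sketch below illustrates only the task setup, not the proposed cross-modal semantic perception network. It assumes precomputed clip features and a query embedding (toy 2-D vectors here) and uses a hypothetical brute-force baseline: every candidate span is scored by the cosine similarity between its mean-pooled clip features and the query, and the best span is returned. The function names `localize` and `temporal_iou` are illustrative; temporal IoU is a common way to compare a predicted span with a ground-truth span in moment-localization work, but this document does not state which metrics the paper reports.

```python
import math
from typing import List, Tuple

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def mean_pool(clips: List[Vector]) -> Vector:
    """Average a non-empty list of clip feature vectors dimension by dimension."""
    dim = len(clips[0])
    return [sum(c[d] for c in clips) / len(clips) for d in range(dim)]


def localize(clip_feats: List[Vector], query_feat: Vector,
             max_len: int) -> Tuple[int, int, float]:
    """Hypothetical baseline: return (start, end_exclusive, score) of the
    candidate span (up to max_len clips) most similar to the query."""
    best = (0, 1, -math.inf)
    n = len(clip_feats)
    for s in range(n):
        for e in range(s + 1, min(n, s + max_len) + 1):
            score = cosine(mean_pool(clip_feats[s:e]), query_feat)
            if score > best[2]:  # strict: earliest, shortest span wins ties
                best = (s, e, score)
    return best


def temporal_iou(pred: Tuple[int, int], gt: Tuple[int, int]) -> float:
    """Intersection over union of two half-open [start, end) spans."""
    inter = max(0, min(pred[1], gt[1]) - max(pred[0], gt[0]))
    union = (pred[1] - pred[0]) + (gt[1] - gt[0]) - inter
    return inter / union if union > 0 else 0.0


if __name__ == "__main__":
    # Toy features: clips 1 and 2 together match the query best.
    clips = [[1.0, 0.0], [0.0, 1.0], [0.5, 1.0], [1.0, 0.0]]
    query = [0.25, 1.0]
    start, end, score = localize(clips, query, max_len=3)
    print(start, end, round(score, 3), temporal_iou((start, end), (1, 4)))
```

Exhaustive span enumeration is quadratic in video length and ignores the intra-modal modeling and inter-modal alignment and supplement that the abstract emphasizes; it only makes the input/output contract of the task concrete.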

BibTeX

@article{hu2023semantic,
  title={Semantic collaborative learning for cross-modal moment localization},
  author={Hu, Yupeng and Wang, Kun and Liu, Meng and Tang, Haoyu and Nie, Liqiang},
  journal={ACM Transactions on Information Systems},
  volume={42},
  number={2},
  pages={1--26},
  year={2023},
  publisher={ACM New York, NY, USA}
}